Decades after the discovery of alpha waves in the human electroencephalogram (EEG), the composer Alvin Lucier used them in music performance. His piece Music for Solo Performer (1965) amplified these brain signals to excite percussion instruments.

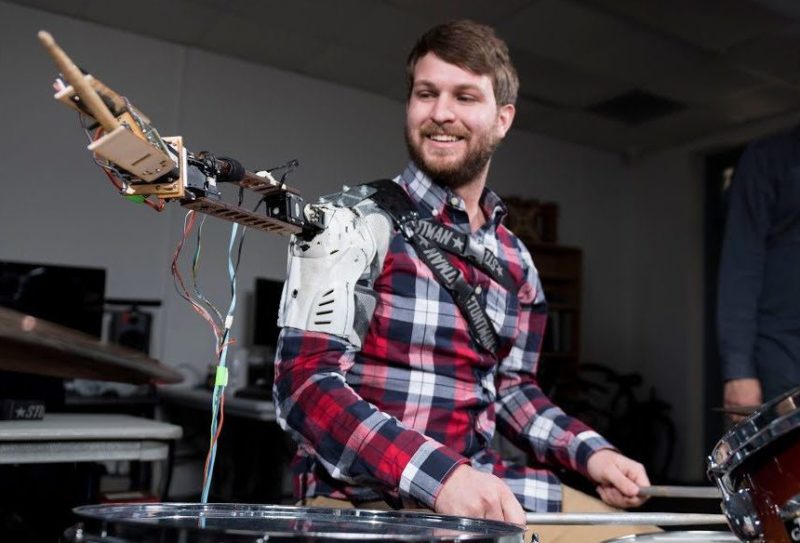

Neurofeedback and biofeedback instruments have interested me since my time at the Input Devices and Music Interaction Lab (IDMIL), but it was not until I was a student in Gil Weinberg’s Robotic Musicianship Group that I had the opportunity to use them to make music. As I discovered firsthand, the signals are notoriously noisy and difficult to control, so I had to be creative in how I used them.

I learned much of what I know about EEG by teaching others. My first EEG instrument was co-designed with undergraduate students in the interdisciplinary Vertically Integrated Projects (VIP) program. After a period of critical thought and discussion, I taught them EEG acquisition, EEG signal processing, audio coding, and design. They used these skills to build the instrument, which we performed publicly in 2017.

Invited Talks

- Winters, R. M., Culwell, C., & Bright, T. J. (2017), Brainwaves and Music, Moogfest, Durham, NC.

- Hsu, T., & Winters, R. M. (2017), New Instruments, Interfaces and Robotic Musicians, Moogfest, Durham, NC.