So much of the content on social media platforms is visual: GIFs, photographs, memes, infographics, bitmojis and emojis, to name a few. Sound and music are vital to the enjoyment of media, but have been largely overlooked.

I joined Microsoft Research in the summer of 2016 for an internship with researchers in computer vision and accessibility. We explored novel ways of transforming social media—especially images—into rich audio experiences for the blind.

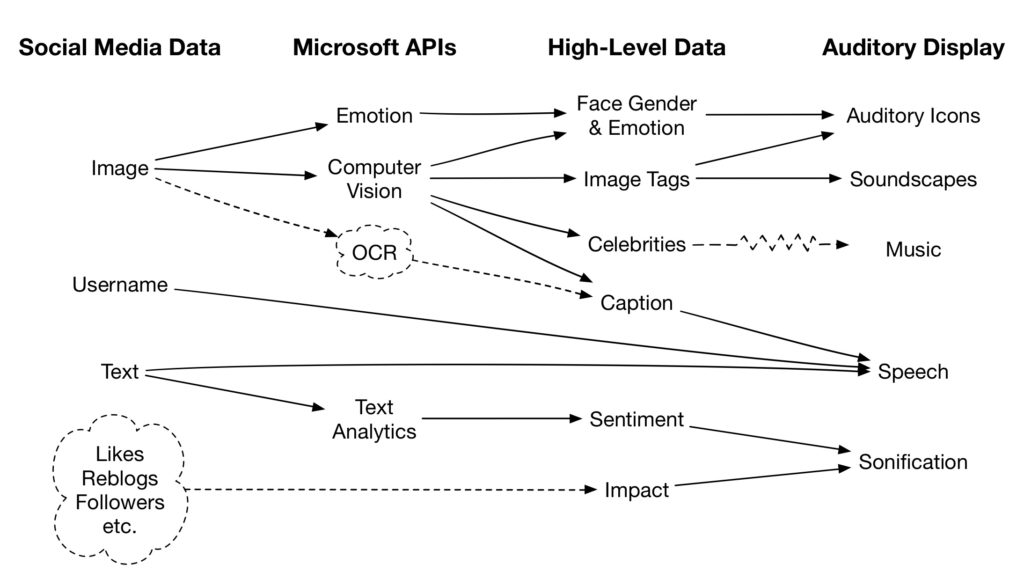

Image sonification systems typically use low-level data such as RGB values as the basis for their audio mappings. However, using Microsoft Cognitive Services (AI), I was able to extract high-level features such as people, sentiment, emotions, environments and objects. This made it easy to craft soundscapes that actually sounded like the images.
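To make the idea of mapping high-level features to sound a little more concrete, here is a minimal sketch in Python. It is not the code from the project: the `features` dictionary shape, the lookup tables and the clip file names are all hypothetical, and it does not reproduce the response format of any Microsoft service. It only shows how recognized environments, objects and emotions could each select a sound layer.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical lookup tables. The clip names are placeholders,
# not assets from the original project.
SOUNDSCAPES: Dict[str, str] = {"beach": "waves.wav", "street": "traffic.wav"}
AUDITORY_ICONS: Dict[str, str] = {"dog": "bark.wav", "guitar": "strum.wav"}
MUSIC_BY_EMOTION: Dict[str, str] = {
    "happiness": "major_theme.wav",
    "sadness": "minor_theme.wav",
}


@dataclass
class SoundLayer:
    kind: str    # "soundscape", "auditory_icon" or "music"
    source: str  # the high-level feature that triggered this layer
    clip: str    # placeholder audio file name


def layers_for_image(features: dict) -> List[SoundLayer]:
    """Pick one sound layer per recognized high-level feature.

    `features` is an assumed, simplified structure such as
    {"environment": "beach", "objects": ["dog"], "emotion": "happiness"}.
    Features without a matching sound are skipped.
    """
    layers: List[SoundLayer] = []

    environment = features.get("environment")
    if environment in SOUNDSCAPES:
        layers.append(SoundLayer("soundscape", environment, SOUNDSCAPES[environment]))

    for obj in features.get("objects", []):
        if obj in AUDITORY_ICONS:
            layers.append(SoundLayer("auditory_icon", obj, AUDITORY_ICONS[obj]))

    emotion = features.get("emotion")
    if emotion in MUSIC_BY_EMOTION:
        layers.append(SoundLayer("music", emotion, MUSIC_BY_EMOTION[emotion]))

    return layers
```

For a photo described as a happy scene with a dog on a beach, this sketch would return a wave soundscape, a bark icon and a major-key music clip, while unrecognized objects are simply left out.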

I ultimately settled on a design that used speech, sonification, auditory icons, soundscapes and music. I had to be smart about the way I arranged them so that people could hear everything without adding too much extra time to the browsing experience. I hope that these types of technologies start finding their way into apps soon. So much of the visual content on the web is still inaccessible to the blind.

Publications

- Winters, R. M., Joshi, N., Cutrell, E., & Morris, M. R. (2019). Strategies for Auditory Display of Social Media. Ergonomics in Design, 27(1), 11–15.